Phew! There’s a lot to take in with data types, collation, precision, scale, length, and Unicode, and we’re just getting warmed up. This week’s post is over 2,000 words long!

Over the last three weeks, we’ve gone fairly deep into data types, and now we are going to see how they come into play with normalization.

If we go back to the first post in this series, I mentioned normalization, and then apparently I forgot about it in the next two posts. What you didn’t see is that I was talking about it all along.

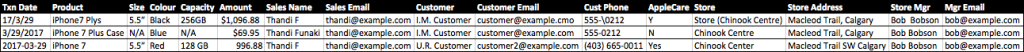

To normalize a database, we want to keep values as unique as possible in each table, and use the most appropriate data types. This week we have two customers, each buying an iPhone in the local Apple Store. The first will buy an iPhone with Apple Care, and a phone cover. The second will buy just an iPhone.

In a denormalized database, we could throw every detail for every transaction into a table with lots of columns (a wide table), with NVARCHAR(4000) or NVARCHAR(MAX) as the data type for every column. That makes things really easy from a design point of view, but, as we’ll see, it becomes a maintenance nightmare down the line.

To make it more interesting, let’s assume all values are entered by the salesperson on a tiny little screen attached to the credit card reader.

Click the image to see the whole thing full size.

Here are just a few things I noticed:

- Typos in some columns;

- E-mail addresses are entered incorrectly;

- Lots of repeated information, but with slight changes each time;

- Product Name and Product Capacity are not consistent;

- Product Size does not match Product Name;

- Dates are entered as text and are not consistent;

- Some columns aren’t populated for each row because they aren’t relevant;

- Amount columns are entered as text and are not consistent;

- Bob’s name has an extra space in the first row;

- Redundant columns.

Normalized data follows the principle of least data entry. If we add a new product, or customer, or employee, we add it in one place only, then in the application have a drop-down list from which we select that item.

Those drop-down lists, in the database context, might be called lookup tables, but to the database engine, they’re just ordinary tables with a Primary Key. It’s the primary key which makes it unique, and wherever we want to use that value elsewhere, we link to it, using a foreign key relationship (as I mentioned in the first post in this series, this is where the word “relational” comes into a relational database).

Going back to our example above, we will now use normalized tables and see why data types matter.

We can uniquely identify several things in the transactions above:

- Transactions

- Products

- Customers

- Salespersons

- Stores

Let’s start at the Stores table (when designing a new database, we often find ourselves working backwards). A store has a manager, that employs sales people, in order to sell stock to customers, in a transaction.

The Stores table would look something like this in SQL Server. Don’t worry for now about the weird syntax.

CREATE TABLE [Stores] (

[StoreID] SMALLINT NOT NULL IDENTITY(1,1),

[StoreCode] CHAR(10) NULL,

[StoreName] NVARCHAR(255) NOT NULL,

[Address] NVARCHAR(4000) NOT NULL,

[ManagerName] NVARCHAR(255) NOT NULL,

[ManagerEmail] NVARCHAR(255) NOT NULL,

CONSTRAINT [PK_Stores] PRIMARY KEY CLUSTERED ([StoreID] ASC)

);

Whoa, Nelly! This is a lot to take in. Notice that the last line has a semicolon to mark the end of the statement. We could just as easily put this all on one line of Transact-SQL code (Transact-SQL, or T-SQL, is the language that the SQL Server database engine understands). The important part is the semicolon that tells the engine where the end of the statement is.

The first line has the CREATE TABLE statement. This tells SQL Server that we intend to create a new table called Stores. The square brackets are a style convention, which we could have week-long discussions on: the bottom line is we can’t trust how humans name things, so SQL Server allows us to use almost any name as long as it’s quoted in square brackets.

After the table name is a round bracket, to tell SQL Server we’re about to start the list of columns we want to put into this table. Notice at the end, before the semicolon, is the matching closing bracket for this list of columns.

I want to point out the NULL, NOT NULL, and IDENTITY keywords here.

There’s an ongoing debate for primary keys, on whether to use existing values that have some meaning to the business (natural keys) for primary keys, or to create a meaningless synthetic identity column that automatically generates a number when a row is inserted into the table.

With retail stores, for example, a natural key might be a unique code that was created by the Sales department. For instance, all Canadian stores might start with the airport code of the nearest city, followed by seven alphanumeric characters. If the natural key is unique, it is a candidate for a primary key.

This is ultimately a business decision, but we must always keep in mind that if there is ever a possibility that the value won’t be unique, or is a long value that takes up a lot of bytes when using millions or billions of rows, an identity column might make more sense.

In this example, I have both: an identity value, generated automatically by SQL Server, and the Sales department’s store code in a separate, nullable column. Nullable means that I may decide to leave that value out for certain rows (e.g., online stores, or stores that don’t have a code yet), and the database engine won’t force me to populate it.

Database purists don’t like NULL values, but I’m a pragmatist, and if everything were perfect, I’d be out of work.

(Note: NULL is always unknown. Null does not mean “empty”. If a value should be empty, then use an empty string, or a zero if it’s a numeric field. Null means that we don’t know what should go into that column, which means, by association, that no two NULLs are equal. We can’t compare what we don’t know. This is a fundamental rule of data, which we should always keep in mind. We will address the concept of data equality later in this series.)

An identity column is specified by a data type (SMALLINT in our example, but could be TINYINT, SMALLINT, INT or BIGINT), followed by the starting value (the seed) and the incrementing value (the increment). The most common usage will be (1,1), starting at value 1 and incrementing by 1 whenever a new row is added.

The seed could also be the minimum value for the data type, though with Memory-Optimized tables, the seed must be 1.

(Note: if inserting a row with an identity column fails for any reason, or a row is deleted, that identity value will no longer be in the list of rows unless we force it to appear again using an IDENTITY_INSERT. We should never assign meaning to an identity value, and we should always expect gaps in the sequence. It is there to enforce uniqueness, and that is all.)

Another way to enforce uniqueness, is to combine two or more columns into a composite key. Should the business decide that the store’s code is not enough, and that a country code should be included in the table, then we could make a composite key out of two columns, which would be our primary key.

We don’t use a composite key in the Stores table though. The list of columns (lines 2 through 7) are as follows:

- StoreID (

SMALLINT): Start with the lowest number of possibilities when creating a Primary Key (remember that this isTINYINT). For stores though, there’s a chance we might have more than 255 locations worldwide, so we pick the next largest data type (SMALLINT, which only needs 2 bytes of storage). Starting atBIGINTmeans we’d need 8 bytes everywhere we refer to theStoreID, which is a significant waste of space. Even usingINThere, which is the default numeric type, would be wasteful. There can be 32,767 stores if we start counting at 1, and 65,535 in total if we start counting at the lowest value (-2^15, or -32,768).

- StoreCode (

CHAR(10)): When I provided the example of the store code above, I referred to an airport code followed by seven alphanumeric characters. Since airport codes are rendered in the Latin alphabet, there is no need to use Unicode here, irrespective of the country in question. This also makes the column fixed-width (3 characters for airport code + 7 characters for the alphanumeric string). We previously discussed whatNULLmeans.

- StoreName (

NVARCHAR(255)): I use Unicode here (theNinNVARCHAR). Because some of my store names might be in countries that use a different alphabet, I need to make sure I can save the correct name in the column. As for the length, my personal preference is to use 255 characters for things like names, but it’s not a requirement. Over the years, I’ve found that 100 is too short and 4000 is too long. Your convention could even be 500, or 1000. Remember, withVARCHARandNVARCHAR, the maximum length doesn’t matter as much because the space required to store this value is the actual length of the string (double for Unicode, because it needs two bytes per character), plus an overhead of 2 bytes (these extra bytes are used internally by SQL Server to know how long the string really is in each row). For shorter text values like names and addresses, I avoidMAXlength where possible becauseMAXmight force the row, or parts of the row, to be stored elsewhere in the database file, affecting performance.

- Address (

NVARCHAR(4000)): Addresses are weird things. They can be really short, or really long, depending on where we go. For instance, my uncle lives on the Isle of Wight (a small island just off the southern coast of England), and instead of a street number, his house has an actual name. There’s no way of knowing how long an address might be, so I go right up to the maximum length. Being Unicode, it’ll use two bytes per character for the value we put into here, plus two additional bytes for the SQL Server overhead of storing a variable length text value. 4000 characters ought to be enough space to store an address, at least in this example.

- ManagerName (

NVARCHAR(255)): This is my 255 character convention again, and you can pick your own. With names, as I mentioned, I prefer a maximum length of 255 characters, and I keep it Unicode to ensure we can capture names in non-Latin alphabets correctly.

- ManagerEmail (

NVARCHAR(512)): I’ve used a Unicode value here again because as of the last couple of years, we have the exciting prospect of Unicode domain names. Before that happened, we could get away withVARCHAR, but no longer. The length is 512, again a personal preference of mine because people can have seriously long email addresses. We might even decide to go to 4000 here.

(Note: I’ve made use of Unicode in this example because my example database will contain international values. This does not mean that your database for storing that Blu-ray collection in the basement must also be in Unicode. Just as we should pick the correct length for strings, we should also keep in mind the intended users of the database, and build for that audience. If we don’t need Unicode anywhere, we shouldn’t use it because it doubles the storage requirements for text values.)

The final part of this Stores table declaration is the Primary Key. Are you exhausted yet? I’m exhausted, and this is just the first of five tables.

Remember previously where I used the IDENTITY property to make sure the rows are unique? Well, there are two parts to uniqueness. IDENTITY does not automatically make a column a primary key. It is only there to provide an auto-incrementing value to ensure uniqueness when inserting new rows.

Enforcing uniqueness, however, is the job of the primary key, which is why we use the keyword CONSTRAINT. We are constraining the values in this column to be unique. This will prevent anyone from inserting a duplicate StoreID into the table.

Notice that there is also the CLUSTERED keyword in that line, which tells SQL Server to make the primary key the clustering key as well. Though this is not the time to discuss indexes yet, keep in mind that in almost all cases, a clustered index will be created on our primary key. When we create a table using the built-in table designer in SQL Server Management Studio, it does this for us.

With a clustering key, the data in the table is stored on disk in the logical order of the clustering key. Imagine the clustering key to be like a phone book, ordered by last name. Here, the sort order of the table is the artificially created StoreID. We will go into more detail in other post.

Next week we will continue our look at normalization, where I will complete the last four tables (in much less detail), and then explain how the relationships work. Stay tuned.

If you would like to provide any feedback or corrections, look me up on Twitter, at @bornsql.