A few years ago, I wrote that a CPU is “a hot mess of on-off switches.” There’s more to it than that when you get into the weeds of caches and cores and logic gates, but at the heart of every single one of the billions of transistors in a modern CPU, electricity either flows for a fixed length of time (a clock tick), or it does not.

If you look closely enough, you’ll see that the electrical flow isn’t all that precise. The flow of electrons may be affected by voltage, magnetic interference, the silicon semiconductor from which the CPU is made, or even background radiation from the universe outside. Those 1s and 0s can flip when conditions change, so we build in safeguards. In our code we might design checksums and run periodic consistency checks to make sure the information (known as data) we’re reading matches those checksums. This is also why you should buy RAM with error-correction built in.
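To make the checksum idea a little more concrete, here is a minimal Python sketch. It assumes CRC-32 (from the standard library’s `zlib` module) as the checksum, which is my choice for illustration and not something any particular system is guaranteed to use. The `flip_bit` helper and the sample bytes are made up for the example.

```python
import zlib


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with a single bit inverted (hypothetical helper)."""
    corrupted = bytearray(data)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


original = b"Hello, data"
stored_checksum = zlib.crc32(original)  # saved alongside the data

# Simulate one stray bit flipping somewhere in the data.
damaged = flip_bit(original, 3)

print(zlib.crc32(original) == stored_checksum)  # True: data is intact
print(zlib.crc32(damaged) == stored_checksum)   # False: the flip is detected
```

A consistency check is then just a matter of recomputing the checksum and comparing it to the stored one.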

The CPU can only work with small amounts of data at a time. When the CPU is done with that data, it puts it in memory. Depending on how that data is managed (the on-or-off bit of information is literally called a bit because computer scientists are great at naming things), it usually forms part of a byte, which by convention is eight bits. In other words, you get eight bits of information per byte, represented by a series of 1s (electricity flowed for a fixed period of time) and 0s (no electricity flowed for that fixed period of time).

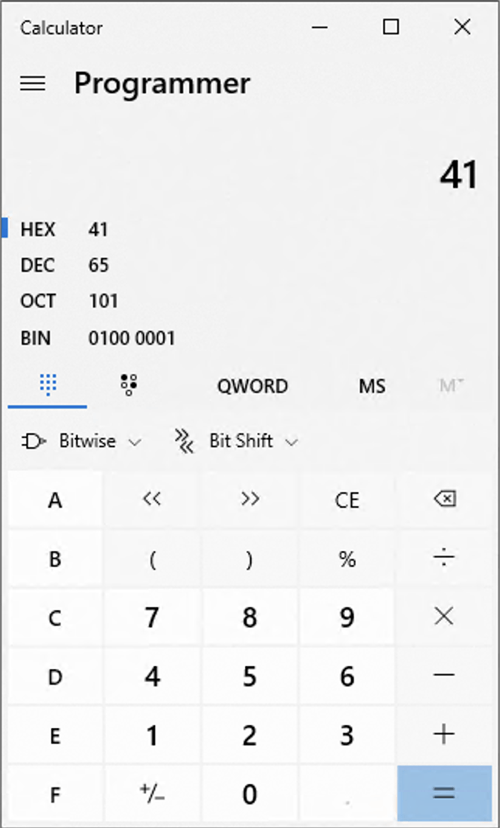

The uppercase “A” in the Latin alphabet, using the ASCII standard, is represented by the byte 01000001.
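If you’d like to check that for yourself, this small Python sketch looks up the number behind the letter and prints it as eight bits. The variable names are just for illustration.

```python
letter = "A"
code_point = ord(letter)          # the number behind the character: 65
bits = format(code_point, "08b")  # eight binary digits, padded with zeros

print(letter, code_point, bits)   # A 65 01000001
```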

So, cool, this is binary. What does it actually mean? Computers can only count in 1s and 0s (on and off), so binary runs out of digits really quickly when writing numbers out compared to our decimal (base-10) system. And, like our counting system, when a column runs out of digits we have to go one column to the left and reset the right-most column back to 0 again.
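Here is a quick Python sketch of that carrying behaviour, counting from zero to four in three binary columns. The list name `counted` is only there for the example.

```python
# Count from 0 to 4, writing each number as three binary digits.
counted = [format(n, "03b") for n in range(5)]

for n, bits in enumerate(counted):
    print(n, bits)
# 0 000
# 1 001
# 2 010  <- right-most column ran out, so it resets and carries left
# 3 011
# 4 100  <- two columns reset at once
```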

How does the letter “A” get turned into 01000001? In ASCII, “A” is represented by the decimal value 65. To convert this to binary we have to convert it to a series of powers of 2, which when added together end up as 65.

When filling up the bits in a byte, we work from right to left, with each column represented by a power of two, starting with an exponent of 0.

Column 8: 2 ^ 0 = 1

Column 7: 2 ^ 1 = 2

Column 6: 2 ^ 2 = 4

Column 5: 2 ^ 3 = 8

Column 4: 2 ^ 4 = 16

Column 3: 2 ^ 5 = 32

Column 2: 2 ^ 6 = 64

Column 1: 2 ^ 7 = 128

If we add those all together, the highest decimal number that a single byte of eight bits can store is 255. If we need to hold a value larger than that, we need two or more bytes. Four bytes together is 32 bits.
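The arithmetic is easy to confirm with a short Python sketch. It assumes unsigned values (no negative numbers), and `max_unsigned` is a hypothetical helper name of my own.

```python
# The value of each column in one byte, from 2 ^ 0 up to 2 ^ 7.
column_values = [2 ** power for power in range(8)]
max_one_byte = sum(column_values)


def max_unsigned(byte_count: int) -> int:
    """Largest unsigned value that fits in byte_count bytes (assumed helper)."""
    return 2 ** (8 * byte_count) - 1


print(column_values)    # [1, 2, 4, 8, 16, 32, 64, 128]
print(max_one_byte)     # 255
print(max_unsigned(2))  # 65535
print(max_unsigned(4))  # 4294967295 (four bytes, 32 bits)
```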

Let’s go back to our example. To figure out how to write 65 out in binary, we find the largest power of 2 that is no bigger than 65. 2 to the power of 6 (written as 2 ^ 6) is equal to 64. If we look at our byte structure, this means Column 2 should be set to 1. That leaves 65 - 64 = 1, which is 2 ^ 0, so we set one more bit at the end, in Column 8. Every other column stays at 0, giving 01000001.
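The same steps can be written as a small Python function. This is only a sketch of the by-hand method (Python’s built-in `format` already does this for you), and `to_binary` is a name I made up.

```python
def to_binary(value: int, width: int = 8) -> str:
    """Write value as binary by subtracting powers of 2, largest first."""
    if not 0 <= value < 2 ** width:
        raise ValueError("value does not fit in the requested number of bits")

    bits = []
    remaining = value
    # Walk the columns from the highest power of 2 down to 2 ^ 0.
    for power in range(width - 1, -1, -1):
        column_value = 2 ** power
        if column_value <= remaining:
            bits.append("1")           # this column is switched on
            remaining -= column_value
        else:
            bits.append("0")           # this column stays off
    return "".join(bits)


print(to_binary(65))  # 01000001
```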

If we want to go the other way and turn the binary back into decimal, we multiply each column’s bit by that column’s power of 2 and add up the results (working from right to left again, but this isn’t required):

1 x 2 ^ 0 = 1

0 x 2 ^ 1 = 0

0 x 2 ^ 2 = 0

0 x 2 ^ 3 = 0

0 x 2 ^ 4 = 0

0 x 2 ^ 5 = 0

1 x 2 ^ 6 = 64

0 x 2 ^ 7 = 0

64 + 1 = 65
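That column-by-column sum looks like this as a Python sketch. The function name `from_binary` is mine, and in real code you would likely just call `int(bits, 2)`.

```python
def from_binary(bits: str) -> int:
    """Add up bit x 2 ^ position, working from right to left."""
    total = 0
    for power, bit in enumerate(reversed(bits)):
        total += int(bit) * 2 ** power
    return total


print(from_binary("01000001"))  # 65
```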

This is what we mean when we talk about binary. The information (the bit of data) can only be in two states. On, or off. 1, or 0. But as we saw in our very simple example, writing out eight bits every time is hard to read when you have a lot of bytes together. We have a few ways of writing the same byte to make it easier to read. We might put a space in the middle, like so: 0100 0001.

However, it’s far more efficient to represent this byte as a hexadecimal value 0x41. It’s still the same value: 01000001 in binary is exactly the same as 65 in decimal, and 41 in hex. As long as you remember that CPUs read that value as binary, you can describe that binary in a more concise way. Notice that I used a leading 0x when I wrote the value in hex. That’s a convention that tells other computer folks that the value is written using hexadecimal.
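Python happens to use the same 0x convention (plus 0b for binary), so a short sketch can show that all three spellings are one and the same number:

```python
value = 0x41  # hexadecimal literal

# The same number written three ways.
print(value == 65 == 0b01000001)  # True

print(hex(65))             # 0x41
print(format(65, "08b"))   # 01000001
print(int("41", 16))       # 65
```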

Like decimal, which has 10 distinct digits including 0, hexadecimal has 16 distinct digits including 0: the digits 0 to 9 followed by the letters A to F. Here you can see how the digits “10” are worth a lot more in hex (16) than in decimal (10) or in binary (2).

Hex - Decimal - Binary

0x00 - 00 - 00000000

0x01 - 01 - 00000001

0x02 - 02 - 00000010

0x03 - 03 - 00000011

0x04 - 04 - 00000100

0x05 - 05 - 00000101

0x06 - 06 - 00000110

0x07 - 07 - 00000111

0x08 - 08 - 00001000

0x09 - 09 - 00001001

0x0A - 10 - 00001010

0x0B - 11 - 00001011

0x0C - 12 - 00001100

0x0D - 13 - 00001101

0x0E - 14 - 00001110

0x0F - 15 - 00001111

0x10 - 16 - 00010000

The big advantage here is that 16 is a power of 2 as well (2 ^ 4), so it’s a natural fit for representing large series of 1s and 0s. Each hexadecimal character stands for exactly four bits, so you can store a whole byte of information in just two hexadecimal characters, and two bytes (16 bits) in four.
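To show why hex lines up so neatly with binary, here is a Python sketch that splits one byte into two groups of four bits and looks up a hex character for each group. The function name `byte_to_hex_digits` is made up for the example.

```python
def byte_to_hex_digits(byte_value: int) -> str:
    """Turn one byte (0 to 255) into two hex characters, four bits at a time."""
    digits = "0123456789ABCDEF"
    high_four_bits = byte_value >> 4    # the left-hand four columns
    low_four_bits = byte_value & 0x0F   # the right-hand four columns
    return digits[high_four_bits] + digits[low_four_bits]


print(byte_to_hex_digits(0b01000001))  # 41  (0100 -> 4, 0001 -> 1)
print(byte_to_hex_digits(0b11111111))  # FF
```

This is also why writing a byte as 0100 0001 with a space in the middle is handy: each half maps straight onto one hex character.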

I’ll be the first to admit that doing any calculations with hexadecimal is complicated. If you feel like you need a calculator, I have some good news: all computers are calculators, and Windows 10 comes with a really nifty one that can do programmer calculations too.

Which brings us to something called endianness. I promise you that’s a real word. It originates in a book written in 1726 by Jonathan Swift, called Gulliver’s Travels, which was not about computers at all.

Computer scientists are not good at naming things. I lied earlier when I said they were. Naming things is hard. Which is why, when I first saw this word, I thought someone was having me on. In the book, when eating boiled eggs some people crack them open on the big end, and some crack them open on the little end. Big-endian versus little-endian. Yes, I know.

Intel CPU architecture stores multi-byte values in memory using little-endian order. This means that the lowest-order byte (the one holding the smallest powers of 2) comes first, at the lowest memory address, and the highest-order byte comes last. The bits inside each byte keep their usual order; only the order of the bytes changes. For example, the two-byte value 0x0102 is stored as the bytes 02 01. In other words, for those of us who write numbers down from left to right with the biggest column first, it’s backwards. If you can’t stomach the term “little-endian,” you can also refer to this system as “byte-reversed.”
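A short Python sketch makes the difference visible. The value 0x12345678 is an arbitrary four-byte number I picked because each byte is easy to spot.

```python
import sys

value = 0x12345678

little = value.to_bytes(4, byteorder="little")
big = value.to_bytes(4, byteorder="big")

print(little.hex())  # 78563412  (lowest-order byte first)
print(big.hex())     # 12345678  (the order we would write by hand)

# The byte order of the machine running this code; "little" on Intel CPUs.
print(sys.byteorder)
```

Notice that each byte keeps its own value (0x78 is still 0x78); it is the sequence of bytes that is reversed.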

It’s worth mentioning here that the data on your hard drive is usually stored in the same order as you’d see it in memory, because it’s much easier to read data off your storage layer in the same sequence. That’s why your SQL Server data in each column is written as little-endian as well, because SQL Server was written by Microsoft, and Microsoft wrote Windows which runs on Intel CPUs.
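Here is a rough Python sketch of what reading such a value back looks like. It assumes a four-byte signed integer stored little-endian, and the bytes are made up for the example rather than copied from a real data file.

```python
import struct

# Four bytes as they might appear in a hex editor (illustrative only).
raw = bytes.fromhex("41 00 00 00")

# "<i" means little-endian, four-byte signed integer.
(as_little,) = struct.unpack("<i", raw)

# ">i" reads the very same bytes as big-endian instead.
(as_big,) = struct.unpack(">i", raw)

print(as_little)  # 65
print(as_big)     # 1090519040
```

Reading the bytes in the wrong order doesn’t raise an error; it quietly gives you a very different number, which is why knowing the byte order matters.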

To summarize our lesson for this week: binary, like the CPU and memory it is manipulated by, is a hot mess of ones and zeros. But those ones and zeros are hard for humans to read and write, so we use hexadecimal as a shorthand because hex can represent binary using fewer characters. When it comes to reading binary in memory or on disk, we use a hex editor to read (and sometimes write) those values, and with the aid of a programmer’s calculator figure out what those bytes mean. And, generally speaking, the bytes are written in reverse order because that’s how Intel did it in the 1970s and old habits die hard.

Hopefully this gives you a little more understanding when you read my posts about how different data types are stored on disk.

Leave your thoughts in the comments below.

Photo by Lucas Myers on Unsplash.

Seeing the OCT and DEC reminded me of the joke:

Why do programmers get Halloween and Christmas mixed up?

Because OCT 31 = DEC 25

I didn’t want to go too deep and explain octal as well, but I do appreciate this gag.

