When SQL Server 2019 was announced, it brought with it UTF-8 support. Also available in Azure SQL Database, UTF-8 is exciting if you have a legacy database and application that needs to support Unicode strings for globalization, but you can’t afford to double the storage requirement by converting all your CHAR and VARCHAR columns to NCHAR and NVARCHAR.

The idea is that you flip the collation on a regular CHAR or VARCHAR column to one of the new UTF-8 supported collations, and you’re done. When you insert a Unicode string it will only use Unicode for the characters it needs to, and you don’t get any silent conversion errors.

Unfortunately there’s a silent truncation problem, and it’s your fault! (Bear with me.)

When we define a string column in SQL Server, we assume that the number behind the CHAR or VARCHAR in parentheses is the number of characters in that string.

This has never been the case. That value refers to the number of bytes that you can store in the column.

But what about NVARCHAR(4000)?

This is even more confusing when you consider VARCHAR(8000) and NVARCHAR(4000). The maximum number of bytes available in a data page on SQL Server is 8,060 bytes (8KB less 96 bytes for the page header, and some space at the end for the slot array), so unless you switch to VARCHAR(MAX) or NVARCHAR(MAX) with their 2GB limit, you’re restricted to 8,000 bytes. While it is technically true that VARCHAR(8000) and NVARCHAR(4000) both store 8,000 bytes in order to fit on a single data page, Unicode needs two bytes per character, limiting you to 4,000 characters. This is possibly the root of the confusion.

Why this matters is that if you have a VARCHAR column which you then switch the collation from ANSI to UTF-8, you need to make sure you modify the column length as well, otherwise you’ll get truncation errors for Unicode strings that exceed the number of bytes available in the column length.

Show me some code

In this code sample, there are four text columns:

- Col1 is a standard Latin1_General_CI_AS string. CI stands for Case-Insensitive, and AS stands for Accent-Sensitive.

- Col2 is a Unicode (UTF-16) variation of the first column.

- Col3 is a standard Latin1_General_CI_AS string, but with the new UTF-8 collation. SC means Supplementary Characters, a feature introduced in SQL Server 2012 and leveraged by UTF-8.

- Col4 is identical to Col3, and is used to demonstrate the need for Unicode input.

USE test;

GO

DROP TABLE IF EXISTS SampleTable;

GO

CREATE TABLE SampleTable

(

ID INT IDENTITY(1,1) PRIMARY KEY,

Col1 VARCHAR(30) COLLATE Latin1_General_CI_AS,

Col2 NVARCHAR(30) COLLATE Latin1_General_CI_AS,

Col3 VARCHAR(30) COLLATE Latin1_General_100_CI_AS_SC_UTF8,

Col4 VARCHAR(30) COLLATE Latin1_General_100_CI_AS_SC_UTF8

);

INSERT INTO SampleTable

VALUES

('This is a test!', N'This is a test!', 'This is a test!', N'This is a test!');

INSERT INTO SampleTable

VALUES

('This is a test❗️', N'This is a test❗️', 'This is a test❗️', N'This is a test❗️');

INSERT INTO SampleTable

VALUES

('Парк Горького', N'Парк Горького', 'Парк Горького', N'Парк Горького');

SELECT

Col1,

DATALENGTH(Col1) AS Col1Length,

Col2,

DATALENGTH(Col2) AS Col2Length,

Col3,

DATALENGTH(Col3) AS Col3Length,

Col4,

DATALENGTH(Col4) AS Col4Length

FROM

SampleTable;

After creating the table we insert a single row of exactly the same string, “This is a test!” with an exclamation mark. Note however that the Col2 and Col4 strings are prefixed with the Unicode N, meaning that SQL Server should treat the input as a Unicode string.

Then we insert a second row with the same data, but this time we replace the exclamation mark with a the red emoji exclamation mark (U+2757).

The third row we insert is the name of my favourite Russian rock band from the mid-1990s, Gorky Park. I have purposefully chosen characters that only exist in the Cyrillic alphabet to demonstrate how Unicode (UTF-16, two bytes per character), and UTF-8 (as many bytes as needed to store Unicode characters, otherwise use Latin) work.

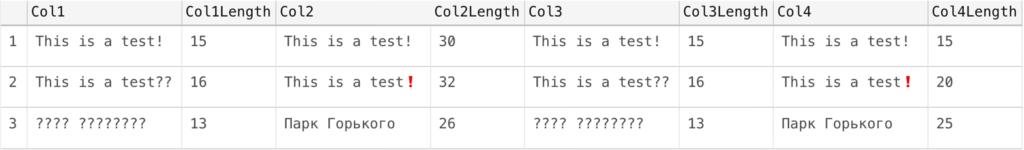

Finally, once these rows are inserted we have a SELECT statement which returns each column in each row, followed by the data length — how much storage was required in bytes to store these characters.

Summary

- Col1: as expected, the first column does not recognize any characters outside the ASCII range. Notice how the unknown characters are rendered as question marks.

- Col2: UTF-16 stores and renders the characters correctly, but at double the storage of Col1. It requires input to be defined as Unicode (the

Nbefore the string). - Col3: perhaps surprisingly, this column does exactly what the first one does, because the input string is not defined as possibly containing Unicode characters.

- Col4: this column stores and renders the characters correctly, only needing the number of bytes to store characters as needed. It also requires input to be defined as Unicode.

If you decide to use a UTF-8 collation in SQL Server 2019 or Azure SQL Database, please keep in mind that it’s only really useful if you remember to change your inputs to recognize Unicode strings. Additionally, if your columns are only sized according to the Latin alphabet you may have to increase the length of your columns as well.

UTF-8 definitely has its place, but I have also seen an argument for using NVARCHAR and page compression instead. Whenever testing new functionality in SQL Server it comes down to maintenance, documentation, and testing.

To read more about UTF-8 support, visit https://aka.ms/sqlutf8. You can also find some useful scripts on Microsoft’s GitHub page to help you avoid truncation when switching to UTF-8.

Leave your thoughts in the comments below.

Photo by Марьян Блан | @marjanblan on Unsplash.

Good overview of the feature. An emoji example really tells the story.

Regarding “Col3: perhaps surprisingly, this column does exactly what the first one does, because the input string is not defined as possibly containing Unicode characters” – non-Unicode string literals (the ones that don’t start with N”) are always encoded in the encoding that corresponds to the collation of the currently active database. So if the collation of the [test] database was either also UTF-8, or one of the Cyrillic ones (ANSI code page 1251), or even a Japanese XJIS (ANSI code page 832) – the value for Col3 would have come through undamaged. For emojis, of course, one would have to have a UTF-8 collation at the database level, as no ANSI page has those.

There may be a slight confusion about ASCII vs ANSI. ASCII covers the range of code points 0-127, and ANSI has first 0-127 values the same as ASCII, and the rest depends on the code page and may have Latin, Cyrillic, Hebrew, and other characters – but not all at once prior to UTF-8. So it’d be more correct to say “switch the collation from ANSI to UTF-8” rather than ASCII.

Technically correct, yes.

Comments are closed.