(Thanks to Erik Darling for reviewing this post. Check out his training materials.)

One of the bigger clichés in the data professional vocabulary (behind “it depends”) is that you always give SQL Server as much RAM as you can afford, because it’s going to use it. But what happens when SQL Server runs out of memory?

Recently a question appeared on my post about how the buffer pool works, asking the following (paraphrased):

What happens if a data page doesn’t exist in the buffer pool, and the buffer pool doesn’t have enough free space? Does the buffer pool use TempDB, [and] does TempDB put its dirty pages into the buffer pool?

This is an excellent question (thank you for asking!). I spent 30 minutes writing my reply and then figured it would make a good blog post this week if I fleshed it out a little.

Dirty pages and checkpoints

The question asks whether TempDB can stand in for the buffer pool in low memory conditions, and the simple answer is no. If you want to give the buffer pool more capacity, you either:

- reduce what’s being put into the buffer pool

- buy more physical memory

- turn on the Buffer Pool Extension

But this isn’t a simple question, so the simple answer doesn’t apply. The more correct answer is that SQL Server manages the buffer pool using a combination of database checkpoints for dirty pages, a FIFO (first-in, first-out) algorithm for clean pages, and TempDB on the side.

To refresh your memory (pun intended), the data file stores your data at rest. The buffer pool (i.e. a large chunk of your RAM) manages the data pages that are being read and changed. All changes are written to the transaction log before they are changed in memory, so that if something goes wrong and SQL Server has to recover from a crash, it just has to read the transaction log from the last known good point in time and roll back uncommitted changes, then roll forward committed changes. That “last known good point in time” is called a checkpoint.

Although SQL Server makes sure all changes are written to the transaction log file before they’re changed in memory, the database engine does not write all changes to the data file when they happen because that would really slow things down. An asynchronous process called the lazy writer does this in the background. It’s not as important because the changes are recorded in the transaction log file after all.

Besides, there’s that periodic checkpoint thing. Approximately every sixty seconds1This setting is configurable, SQL Server sweeps through the buffer pool and writes all dirty (modified) pages to the hard drive, as well as any transaction log still in memory. Checkpoints are a fundamental part of the crash recovery system.

What about TempDB?

This whole checkpoint thing seems to miss the point of the original question: when SQL Server is processing a bunch of data and runs out of space in the buffer pool to put it, what then?

In the case of large reads (for example a full clustered index scan or full table scan) where the table does not fit in memory, SQL Server looks at what the oldest clean (unmodified) pages are, and says goodbye to them. More accurately, it marks that part of memory as open for business so that a new data page can take its place. The Page Life Expectancy counter takes a hit as this page is flushed.

The query will “spill to disk” and take up temporary residence in TempDB only if it needs to do something that requires it (like sort operations), which in turn can also depend on the memory grant given to the query. Lots of things spill to disk all the time, and Erik Darling has written a bunch about when and how that happens.

The key thing here is that if it’s doing a read (SELECT), no data is being modified. There might be a bunch of new data pages in the buffer pool, but they are not dirty pages, so they’re free to leave at any time. If SQL Server crashes and TempDB is recreated, no data loss has occurred because Gordon didn’t get his report. Then every sixty seconds or so, the dirty pages are also flushed to disk for crash recovery purposes.

For large writes that don’t fit in the buffer pool, that’s where the transaction log comes in. Each query that performs a modification (INSERT, DELETE, UPDATE) is written to the log. More correctly, the data that will be affected by the query is written to the log file first, so that if the query needs to roll back for any reason, the log contains a transactionally-consistent version of the data. Then, the modification is made, writing to the transaction log. Once it has committed, the pages are changed in memory and then the checkpoint operation flushes the changes to disk.

If there is not enough space in memory, it will take longer to write the changes, and automatic checkpoint operations might increase during that time. Additionally, queries just won’t start until there’s enough space in the buffer pool, and you can monitor the Wait Statistics to see if this is happening.

In conclusion

If your server has memory pressure and Gordon really needs that report by Friday lunch, SQL Server will likely use space in TempDB to help that report run, but it doesn’t replace the buffer pool. Clean pages will be evicted as needed. If there are other people making changes in other parts of the database, those dirty pages (once they are committed) are flushed to disk during a checkpoint operation as well. You need as much memory as you can get.

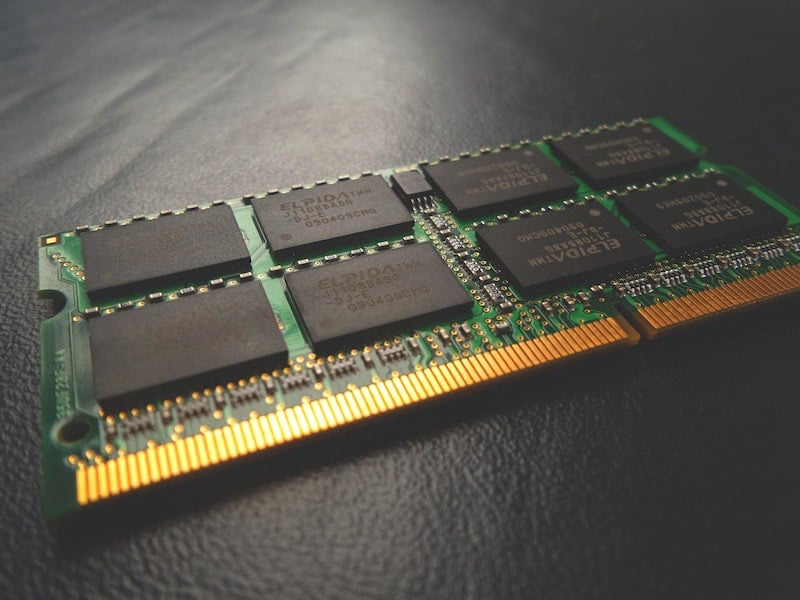

Photo by Possessed Photography on Unsplash

Hi Randolph, nice post :-).

There are some gotchas with tempdb and buffer pool, checkpoint, indirect checkpoint, lazywriter and eager writer on tempdb… yep, lot of things happening there.

I’ve wrote about it here https://www.red-gate.com/simple-talk/sql/performance/tempdb-heres-a-problem-you-didnt-know-you-have/ and I spoke about it with more details in my PASS Summit 2020 (every time I say PASS Summit I feel bad :-(, we’ll miss it) session https://www.youtube.com/watch?v=J-eDtFEg5sQ check this out.

Regards

Fabiano

Comments are closed.