Today we’ll begin taking a look at the buffer pool in SQL Server and how it’s normally used. Before we dive too deeply into the buffer pool, we need to remind ourselves of a few fundamentals of how computers process and store information, as that relates to how the buffer pool operates.

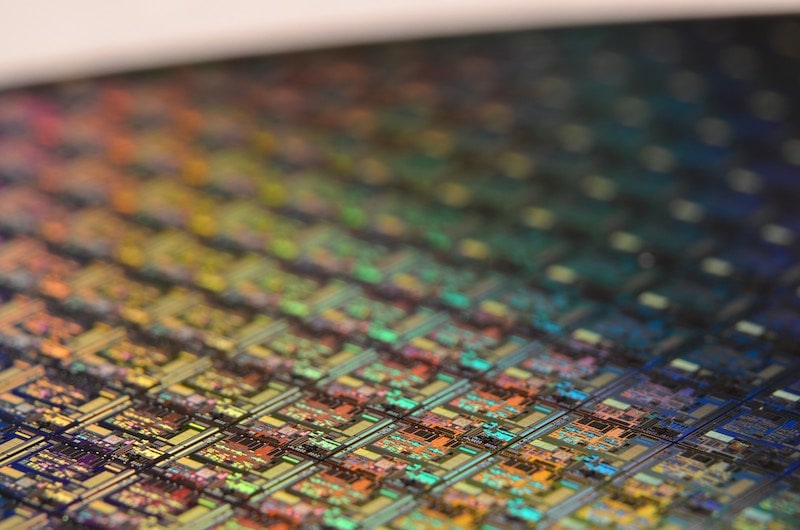

A computer is made up of four main components. The CPU, or central processing unit, is where computer code is executed and calculations take place. I’ve previously described it as a hot mess of on-off switches. The CPU cycles billions of times per second so it needs to keep track of stuff, which it does by storing it in different levels of memory. A modern CPU typically has three levels of cache, or memory, on the physical die, and occasionally a fourth. The die is the piece of silicon that the circuits are fabricated on. In other words, we can think of the levels of cache as being built directly into the CPU.

This becomes important when considering how far electrons move on that CPU die. The fastest memory is that which is closest to the part of the CPU that does the calculations. This is called Level 1 (L1) cache. There are generally two more levels, L2 and L3, and some processors add an L4. On a CPU with more than one core (common these days), L3 and higher are usually shared between the cores. Shared caches are larger, sit farther from each core, and have to coordinate access between cores, all of which makes them slower than L1. Because they are still built into the CPU, though, they are much closer to the action, so they are faster than the main memory of the computer outside the CPU, which is also known as RAM (random access memory), our second main component of a computer.
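
To make the "closer is faster" idea concrete, here is a minimal sketch of a lookup that walks the hierarchy from the fastest level to the slowest. The latency figures are assumed, rounded, order-of-magnitude values chosen for illustration, not measurements of any particular CPU, and the `lookup` function and `LEVELS` table are hypothetical names.

```python
from typing import Dict, List, Set, Tuple

# Assumed, rounded access costs in nanoseconds (illustrative only,
# not measured on any real processor).
LEVELS: List[Tuple[str, int]] = [
    ("L1", 1),
    ("L2", 4),
    ("L3", 40),
    ("RAM", 100),
]


def lookup(address: int, contents: Dict[str, Set[int]]) -> Tuple[str, int]:
    """Search each level in order and return (level found, total ns spent).

    Every level that is checked adds its cost, so a value that is only
    in RAM pays for the L1, L2 and L3 misses along the way.
    """
    elapsed_ns = 0
    for name, cost_ns in LEVELS:
        elapsed_ns += cost_ns
        if address in contents.get(name, set()):
            return name, elapsed_ns
    raise KeyError(f"address {address} is not in any level")


if __name__ == "__main__":
    contents = {"L1": {1}, "L3": {2}, "RAM": {1, 2, 3}}
    for address in (1, 2, 3):
        print(address, lookup(address, contents))
```

Even with made-up numbers, the shape of the result is the point: a miss at every cache level costs far more than a hit in L1.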

Those various CPU caches are way too small to store the contents of a responsive web page, so the CPU makes ample use of RAM instead; adding more RAM to a server will generally make SQL Server go faster. RAM is volatile, transient, and temporary. Many (many!) times every second, the capacitors inside the RAM chips storing those bits of data start to leak, and need a boost of electricity going through the RAM to keep the bit values set. In other words, once electricity stops flowing, the contents of RAM are lost.

The third main component of a computer is the storage layer. Many changes have occurred in this field in recent years, including the advent of non-volatile flash memory (also known as solid-state storage) and persisted memory (which uses a bit of magic behind the scenes to keep the most used data in memory, but backed by persisted storage). Despite these advances, many people are still using spinning drives with a magnetic read/write head (known as magnetic storage). Backup tapes are also a form of magnetic storage, and before we assume that tape has gone the way of the floppy disk drive, it might interest you to know that archival cloud services such as Amazon Glacier and Azure’s cold storage tiers are widely reported to make use of boatloads of magnetic tape to achieve low-cost long-term storage.

The fourth main component in a computer is the network card. While a modern NIC (network interface card) has its own processor built in to reduce load on the computer’s CPU, there are some cases where network features need to be handled by the main CPU. The network is critical in modern infrastructure as it functions as the virtual circuit board of VMs and networked storage like SANs (storage area networks).

Now that we’ve reminded ourselves of those fundamentals, let’s take a closer look at the buffer pool.

The buffer pool in SQL Server resides in the computer’s main memory (RAM). When the database engine requests a data page for reading or writing, it looks for that page in the buffer pool first. The buffer pool itself controls access between RAM and storage. If the data page that the database engine requests is not in RAM, a request is sent to the storage layer to read that page. This may be storage directly attached to the system, or storage reached over the network.
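
The lookup described above behaves like a read-through cache. Here is a minimal sketch of that idea, not SQL Server’s actual implementation: the `BufferPool` class, the `fake_storage` function and the counter names are all hypothetical, although "logical read" and "physical read" are the terms SQL Server itself uses for a page request and a read from storage. The 8 KB page size matches SQL Server’s data pages.

```python
from typing import Callable, Dict

PAGE_SIZE = 8192  # SQL Server data pages are 8 KB


class BufferPool:
    """Toy read-through cache: serve pages from memory, else read storage."""

    def __init__(self, read_from_storage: Callable[[int], bytes]) -> None:
        self._read_from_storage = read_from_storage
        self._pages: Dict[int, bytes] = {}
        self.logical_reads = 0   # every page request
        self.physical_reads = 0  # requests that had to go to storage

    def get_page(self, page_id: int) -> bytes:
        self.logical_reads += 1
        if page_id not in self._pages:
            # Page is not in memory: fetch a byte-for-byte copy from storage.
            self.physical_reads += 1
            self._pages[page_id] = self._read_from_storage(page_id)
        return self._pages[page_id]


def fake_storage(page_id: int) -> bytes:
    """Stand-in for a disk or SAN read (hypothetical): returns an 8 KB page."""
    return page_id.to_bytes(4, "little") * (PAGE_SIZE // 4)


if __name__ == "__main__":
    pool = BufferPool(fake_storage)
    pool.get_page(7)  # first request goes to storage
    pool.get_page(7)  # second request is served from memory
    print(pool.logical_reads, pool.physical_reads)
```

The caller never needs to know where the page came from, which is the whole point of putting the buffer pool between the engine and storage.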

Once the data page is retrieved from storage, it is loaded into the buffer pool as a byte-for-byte copy. By default, for databases created on SQL Server 2005 or later, every data page contains a tiny checksum in the page header (the first 96 bytes of the data page). The checksum is calculated when the page is written to storage and checked again when the page is read back, which verifies that the page has not changed since it was written. This is critical for detecting corruption. Along with ECC RAM (Error-Correcting Code memory), this reduces the possibility of corrupted data going unnoticed when it is processed in the CPU.
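
As a rough illustration of how a page checksum catches corruption, the sketch below stamps a checksum into the header of an 8 KB page and verifies it later. It makes several assumptions: CRC-32 from Python’s `zlib` stands in for SQL Server’s own checksum algorithm, the offset of the checksum field is a placeholder picked for the example, and the function names are hypothetical.

```python
import zlib

PAGE_SIZE = 8192      # SQL Server data pages are 8 KB
HEADER_SIZE = 96      # the page header is the first 96 bytes
CHECKSUM_OFFSET = 60  # placeholder position inside the header (assumption)
CHECKSUM_LEN = 4


def compute_checksum(page: bytes) -> int:
    """Checksum the page with the checksum field itself zeroed out."""
    scratch = bytearray(page)
    scratch[CHECKSUM_OFFSET:CHECKSUM_OFFSET + CHECKSUM_LEN] = bytes(CHECKSUM_LEN)
    return zlib.crc32(bytes(scratch))  # CRC-32 is a stand-in algorithm


def stamp_checksum(page: bytes) -> bytes:
    """Return a copy of the page with its checksum stored in the header."""
    if len(page) != PAGE_SIZE:
        raise ValueError("page must be exactly 8 KB")
    stamped = bytearray(page)
    value = compute_checksum(page).to_bytes(CHECKSUM_LEN, "little")
    stamped[CHECKSUM_OFFSET:CHECKSUM_OFFSET + CHECKSUM_LEN] = value
    return bytes(stamped)


def verify_checksum(page: bytes) -> bool:
    """Recompute the checksum and compare it with the stored value."""
    stored = int.from_bytes(
        page[CHECKSUM_OFFSET:CHECKSUM_OFFSET + CHECKSUM_LEN], "little"
    )
    return stored == compute_checksum(page)


if __name__ == "__main__":
    page = stamp_checksum(bytes(PAGE_SIZE))
    damaged = bytearray(page)
    damaged[500] ^= 0x01  # flip a single bit in the body of the page
    print(verify_checksum(page), verify_checksum(bytes(damaged)))
```

Note that a checksum only detects that a page has changed; it cannot repair it, which is why backups still matter.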

As an example, let’s say we run a query that selects ten rows from a table. The query is parsed and compiled into a query plan, which is then executed on the CPU. As the plan runs, it asks SQL Server for the data pages it needs (either through clustered indexes, non-clustered indexes or heaps), which in turn are requested from the buffer pool. The buffer pool will check if those pages are in memory. If they are not, the lookup misses (a physical read, in SQL Server terms) and the page is read from storage instead. Once the pages required to execute the query are in the buffer pool, the query can be run from the CPU, making use of the data in memory. These pages will remain in memory in the buffer pool unless the space is needed for something else. The notorious counter Page Life Expectancy estimates how long, in seconds, a page is expected to stay in the buffer pool without being referenced.
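
The phrase "unless the space is needed for something else" is doing a lot of work, so here is a minimal sketch of one way a fixed-size pool could decide which page to drop. It uses a plain least-recently-used (LRU) policy, which is an assumption for illustration: SQL Server’s real eviction logic is more sophisticated, and a real engine must also write modified (dirty) pages to storage before reusing their space, which this sketch ignores. The `BoundedBufferPool` class and its members are hypothetical names.

```python
from collections import OrderedDict
from typing import Callable, List


class BoundedBufferPool:
    """Toy fixed-size page cache with least-recently-used eviction."""

    def __init__(
        self, capacity_pages: int, read_from_storage: Callable[[int], bytes]
    ) -> None:
        if capacity_pages < 1:
            raise ValueError("capacity_pages must be at least 1")
        self._capacity = capacity_pages
        self._read_from_storage = read_from_storage
        # Oldest entry first, most recently used entry last.
        self._pages: "OrderedDict[int, bytes]" = OrderedDict()
        self.physical_reads = 0
        self.evictions = 0

    def get_page(self, page_id: int) -> bytes:
        if page_id in self._pages:
            # Hit: mark the page as recently used.
            self._pages.move_to_end(page_id)
            return self._pages[page_id]
        # Miss: make room if the pool is full, then read from storage.
        self.physical_reads += 1
        if len(self._pages) >= self._capacity:
            self._pages.popitem(last=False)  # drop least recently used page
            self.evictions += 1
        page = self._read_from_storage(page_id)
        self._pages[page_id] = page
        return page

    def cached_page_ids(self) -> List[int]:
        return list(self._pages)


if __name__ == "__main__":
    pool = BoundedBufferPool(2, lambda page_id: bytes([page_id]) * 4)
    for page_id in (1, 2, 1, 3):
        pool.get_page(page_id)
    print(pool.cached_page_ids(), pool.physical_reads, pool.evictions)
```

In this toy model, a pool that is too small for the working set evicts pages almost as soon as they arrive, which is the situation a low Page Life Expectancy hints at.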

Next time, we’ll continue by taking a look at the buffer pool extension and persisted memory.

Hi, thank you for your post. I have a question:

What happens if a page doesn’t exist in the buffer pool and the buffer pool doesn’t have enough free space?

Does the buffer pool use tempdb? Or does tempdb put its dirty pages in the buffer pool?

Hi Fatemeh

You’re on the right track. I have a post coming on Wednesday which addresses your question. You will be able to see it here once it’s published.

Stay tuned!
