(This is a more technical post than last week. If you are not familiar with SQL Server internals, I will not be upset if you skip it. Otherwise, let’s dive in.)

Last week I introduced the SQL Server 2016 feature known as System-Versioned Tables, or Temporal Tables. I explained how to convert an existing table to a temporal table and that it uses a methodology called effective dating.

This week, I’m going to go under the covers to see how they actually work, by reviewing the Transaction Log. How exciting!

As we know, every change to the state of a database is written to the transaction log before it is committed. (This does not necessarily apply to In-Memory OLTP tables, which can have delayed durability.)

This means that we can see all changes made to temporal tables, from their creation (or changing an existing table to a temporal table) to their modification.

I’m going to use some undocumented features in this post because I’m using a development environment, and I won’t be sad if I lose my database. I would not recommend running these commands in a production environment.

My development database is in Simple Recovery, which means that I can clear the Transaction Log with a simple CHECKPOINT command. Everything I see in the log after that will be whatever I ran after the checkpoint.

(Note: if we were using the Full Recovery Model instead, we would need to take a transaction log backup first to ensure that the log clears. This includes an implicit checkpoint.)

USE [BornSQL]; GO CHECKPOINT; GO -- Use the undocumented fn_dblog() function -- with NULL start and end transaction LSNs -- to return everything in the log. SELECT * FROM fn_dblog(NULL, NULL); GO

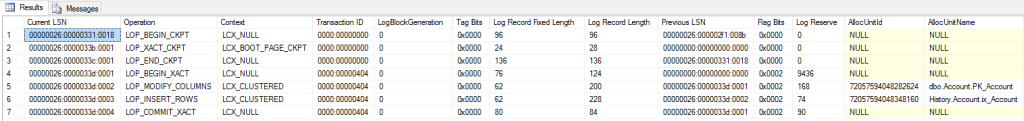

All we should see here are three rows:

LOP_BEGIN_CKPT |

Begins the Checkpoint operation |

LOP_XACT_CKPT |

Writes the Checkpoint information to the boot page of the database and commits the transaction |

LOP_END_CKPT |

Ends the Checkpoint operation |

Now we can start playing with our temporal tables to see how they work.

Remember last week when I said that adding the history table is a metadata operation? Let’s take a look and see if I was telling the truth, and what that actually means.

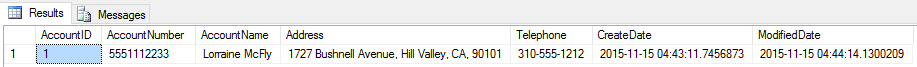

Assume we have our [dbo].[Account] table with a row of data for “Lorraine McFly”, which we now need to convert to a temporal table.

Recall that there are two stages to converting an existing table. We can review these separately and run a checkpoint in between, for simplicity’s sake.

Adding the Temporal Period Columns

In the first stage, we add the two DATETIME2(7) columns and set the temporal period.

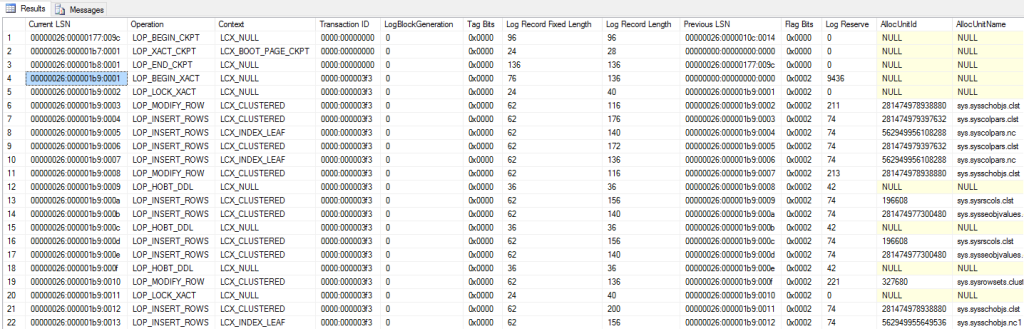

The fn_dblog() output gives us 32 rows in the transaction log (excluding the checkpoint information).

Jump over to the [AllocUnitName] column and have a look through those values. As the transaction log is showing us, these are metadata operations because they are modifying the system base tables only. No user data is being affected by this operation.

However, an exclusive lock is required on the [dbo].[Account] table to perform the change.

You may want to view the SQL Server 2016 Books Online section on system base tables to follow along.

LOP_BEGIN_XACT and LOP_LOCK_XACT are self-explanatory. Even system base tables need to be locked to add or modify rows. In row 6, we see LOP_MODIFY_ROW on the sys.sysschobjs.clst AllocUnitName. This refers to a clustered index change on an object in the database.

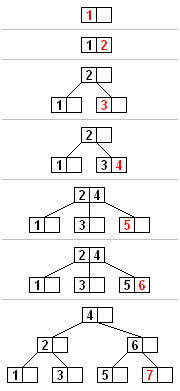

Tables can either be heaps or clustered indexes. With a clustered index, the index is the data, in the form of a B+tree (balanced tree).

This is what a HoBT is: Heap or B+tree. Paul Randal and several others can take you into a lot more detail if you are curious, but the important thing to note here is that HoBT generally refers to a table.

So when we see a modification to a clustered index in the transaction log, we can think of it as a table with a clustered index that is being modified, as opposed to a heap, which is a table with no clustered index.

(Note: a clustered index does not have to be on the Primary Key of a table. It is a matter of convenience that a Primary Key is created as a clustered index on SQL Server when using Management Studio. It is not a requirement.)

At rows 12 and 15, seen in the transaction log output above, we see two operations following a LOP_HOBT_DDL event. The second operation (see rows 14 and 17), the LOP_INSERT_ROWS operation against sys.sysseobjvalues.clst, would be where the temporal table START and END period properties are being written.

It is really that simple. All we have seen here is that two columns are added to the metadata, and their properties are assigned to a system base table. There is no magic in the structure. All that magic happens in the database engine code itself. And as we’ll see later in this post, there’s no magic in the code.

(Note: the sys.sysseobjvalues base table does not appear in the documentation. Keep in mind that this is based on CTP 3.0 of the product. It would not be the first time that I’ve found a typo in SQL Server either. This could be a mistake, or an undocumented table. Who knows? If I were to hazard a guess, I would think that the “se” in “sysseobjvalues” stands for “system extensions”.)

From rows 28 onward, seen in the log output above, we see that the indexes on the base tables that were modified need to be rebalanced according to the B+tree algorithm. Pretty cool, eh?

Converting to a Temporal Table

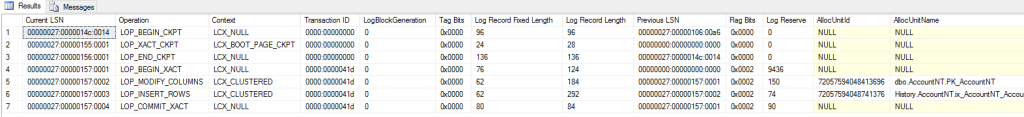

The second stage is creating and linking the history table, and this is the piece that I said was a metadata operation.

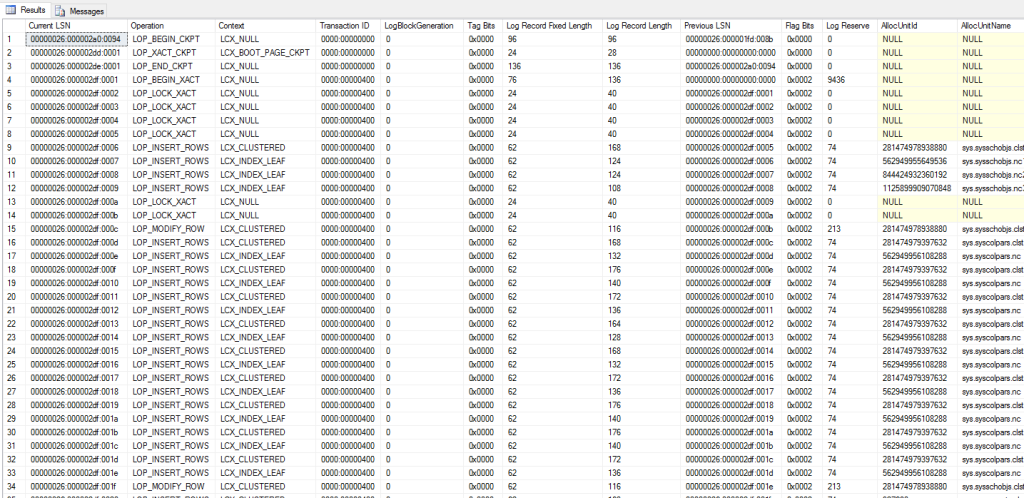

Reviewing the transaction log, we can see about 70 log records in the transaction log output.

Sometimes we might see LOP_EXPUNGE_ROWS and LOP_SET_BITS. Don’t be alarmed. These operations are part of the Ghost Cleanup phase. SQL Server is doing its own things behind the scenes, and we would see that in the log records from time to time.

There are a lot more locks in this step of the temporal table conversion, for obvious reasons. A new table has to be created. A new index has to be created. Indexes on the system base tables may have to be re-balanced.

Through all of this activity, one thing is clear: we would see exactly the same activity when a normal user table is created or modified.

Throughout both steps of this entire process, comprising approximately 100 log records, no data is modified in the user table. The only objects in [AllocUnitName] are system base tables. Converting to a temporal table is indeed a metadata operation. However, SQL Server must take an exclusive lock on the primary table, when adding the two PERIOD columns, and creating the history table.

What about modifying the data?

Where does that leave data modifications, then? Let’s update “Lorraine McFly” back to “Lorraine Baines”.

UPDATE [dbo].[Account]

SET [AccountName] = N'Lorraine Baines',

[ModifiedDate] = SYSUTCDATETIME()

WHERE [AccountID] = 1;

GO

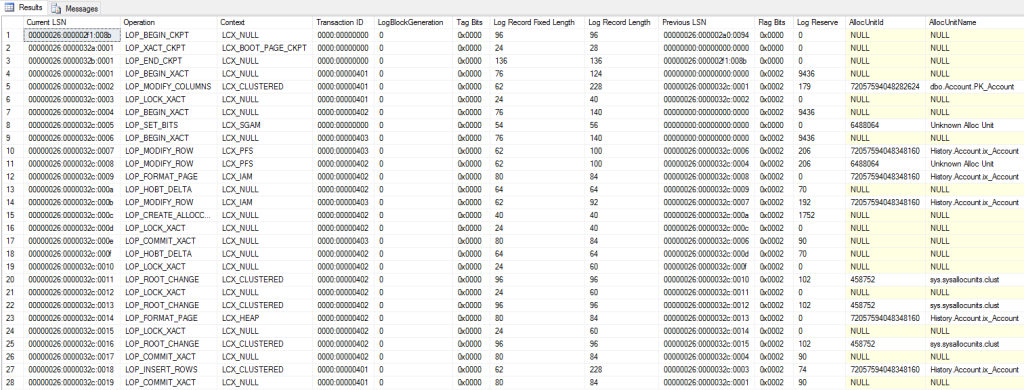

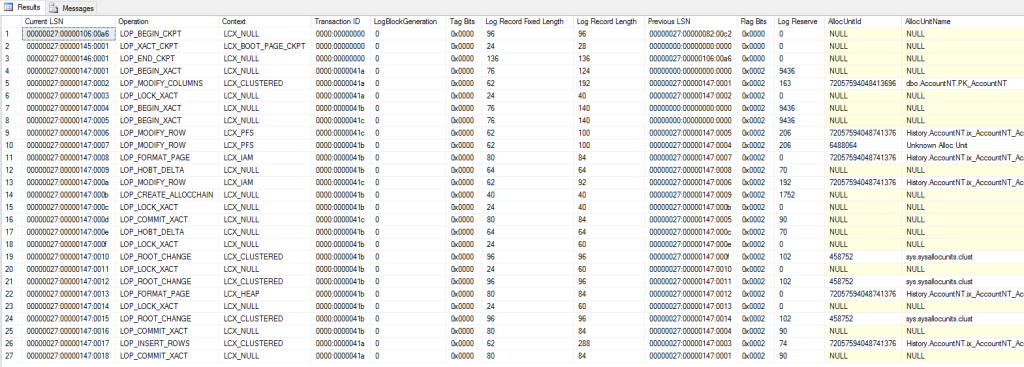

We can see 24 log records now. First is the obvious modification to the [dbo].[Account] table at row 5. Notice that this happens right at the top of the sequence, after an implicit transaction is started (row 4).

Now, because the [History].[Account] table was empty, SQL Server only allocated space when it was needed. The next few log records are the PFS (Page Free Space, though it’s a little more complicated than that) and IAM operations (Index Allocation Map, but also a little more complicated than that).

(Note: if you want to read more, Paul Randal goes into depth about GAM, SGAM, PFS and other allocation maps in this post.)

This can be a bit confusing, especially to readers who are not familiar with SQL Server internals. Also, we have a lot of log records relating to operations outside of temporal tables.

To make it easier, I’m going to run a checkpoint to clear the transaction log, then update Lorraine to her full name, “Lorraine Baines McFly”.

UPDATE [dbo].[Account]

SET [AccountName] = N'Lorraine Baines McFly',

[ModifiedDate] = SYSUTCDATETIME()

WHERE [AccountID] = 1;

GO

Reviewing the log records again, we only have 4 rows, excluding the checkpoint.

Row 4 and 5 are the same as we saw previously: a transaction is created, and the primary table’s row is changed. And as we would expect, these are followed by a LOP_INSERT_ROWS into the [History].[Account] table, followed by a LOP_COMMIT_XACT to commit the entire transaction.

If we had a stored procedure or update trigger, it would perform exactly the same steps, and we would wrap it in our own transaction.

Prove it!

Instead of taking my word for it, let’s compare this against an update trigger on a table which has not been converted to a temporal table.

Firstly, create our new Account table, with the same structure as the original [dbo].[Account] table that we created last week.

-- Non-temporal table

CREATE TABLE [dbo].[AccountNT] (

[AccountNTID] INT IDENTITY(1, 1) NOT NULL,

[AccountNumber] BIGINT NOT NULL,

[AccountName] NVARCHAR(255) NOT NULL,

[Address] NVARCHAR(1000) NOT NULL,

[Telephone] NVARCHAR(20) NOT NULL,

[CreateDate] DATETIME2(7) NOT NULL,

[ModifiedDate] DATETIME2(7) NOT NULL,

CONSTRAINT [PK_AccountNT] PRIMARY KEY CLUSTERED ([AccountNTID] ASC)

);

GO

ALTER TABLE [dbo].[AccountNT] ADD CONSTRAINT [DF_AccountNT_CreateDate] DEFAULT(SYSUTCDATETIME())

FOR [CreateDate];

GO

ALTER TABLE [dbo].[AccountNT] ADD CONSTRAINT [DF_AccountNT_ModifiedDate] DEFAULT(SYSUTCDATETIME())

FOR [ModifiedDate];

GO

-- Populate the table with our test data

INSERT INTO [dbo].[AccountNT] (

[AccountNumber],

[AccountName],

[Address],

[Telephone]

)

VALUES (

5551112233,

N'Lorraine Baines',

N'1727 Bushnell Avenue, Hill Valley, CA, 90101',

N'310-555-1212'

);

GO

Secondly, we manually create a history table with the same structure. Then we add a clustered index on the AccountNTID column. This cannot be unique because an AccountNTID may be logged more than once.

-- Manually-created history table

CREATE TABLE [History].[AccountNT] (

[AccountNTID] INT IDENTITY(1, 1) NOT NULL,

[AccountNumber] BIGINT NOT NULL,

[AccountName] NVARCHAR(255) NOT NULL,

[Address] NVARCHAR(1000) NOT NULL,

[Telephone] NVARCHAR(20) NOT NULL,

[CreateDate] DATETIME2(7) NOT NULL,

[ModifiedDate] DATETIME2(7) NOT NULL

);

GO

-- Add a clustered index on the AccountNTID column

CREATE CLUSTERED INDEX ix_AccountNT_AccountNTID ON [History].[AccountNT] (AccountNTID);

GO

Thirdly, we create an UPDATE and DELETE trigger to capture any changes to the table.

-- Update trigger

CREATE TRIGGER [TR_AccountNT_Update] ON [dbo].[AccountNT]

AFTER UPDATE, DELETE

AS

INSERT INTO [History].[AccountNT] (

[AccountNTID],

[AccountNumber],

[AccountName],

[Address],

[Telephone],

[CreateDate],

[ModifiedDate]

)

SELECT [AccountNTID],

[AccountNumber],

[AccountName],

[Address],

[Telephone],

[CreateDate],

[ModifiedDate]

FROM DELETED;

GO

Now, when we update a row in the [dbo].[AccountNT] table, the trigger will fire and insert a row into the history table using the DELETED.* information.

UPDATE [dbo].[AccountNT]

SET [AccountName] = N'Lorraine McFly',

[ModifiedDate] = SYSUTCDATETIME()

WHERE [AccountNTID] = 1;

GO

The IAM and PFS operations are polluting the log, so let’s do a second update.

UPDATE [dbo].[AccountNT] SET [AccountName] = N'Lorraine Baines McFly', [ModifiedDate] = SYSUTCDATETIME() WHERE [AccountNTID] = 1; GO

Look at the log records. They are identical to before. In fact, because a trigger is called in the same implicit transaction as the UPDATE statement, we didn’t even need to wrap the INSERT statement in a transaction.

What have we learned?

Temporal tables are doing exactly what an update or delete trigger (or stored procedure) would do, based on what we saw in the log records.

If you presently track data modifications using triggers and stored procedures, upgrading to SQL Server 2016 will save you a huge amount of time in creating and maintaining audit code.

Next time we will look at how temporal tables can be modified, once data has been written to them. Stay tuned.

Photo by Mari Helin on Unsplash.