- What’s new in SQL Server 2025 CTP 2.0

by Randolph

Three years ago, when the first public preview of SQL Server 2022 (CTP 2.0) was announced, I was a few months in at the SQL Docs team, and had very little to do with that release. Three years later, the team is slightly larger (we’re called Data Docs now), and I was much more…

- The hell of Git line endings and the (not so) simple fix

by Randolph

I wrote a stream-of-consciousness post a few months ago about what I do in my day job at Microsoft, working in the Database Docs team. Basically we spend most of our day in an obscure distributed version control system (DVCS) by the name of Git. Written over a weekend by the creator of Linux…

- Don’t run CHKDSK while SQL Server is running

by Randolph

On behalf of every database administrator everywhere, I implore you not to run CHKDSK on a system that has a live database installed and running. This includes, but is not limited to, SQL Server, PostgreSQL, MySQL, MariaDB, SQLite, Oracle, and DB2. This also includes development and test environments. If you have any of these database…

- How do you restore a SQL Server 2000 database in the year 2024?

by Randolph

A discussion on LinkedIn led to this hypothetical “real world” question: Problem statement: I have a SQL Server 2000 database backup that I need to restore to a supported version of SQL Server (preferably SQL Server 2022). How do I do that? If this were my problem to solve, I would: Install SQL Server…

- What I actually do at Microsoft

by Randolph

I started working at Microsoft in January 2022. I enjoy it. I even wrote a stream-of-consciousness post about it last year. A significant part of our job in the Database Docs team is to resolve customer feedback issues about documentation. It doesn’t quite consume half of my time every month, but it might. Feedback…

- I can no longer recommend Liquid Web for hosting

by Randolph

EDIT (2024-04-30): I have closed my hosting company, and this site now lives on a Hetzner server in Germany. Original post continues below. I’ve had a really disappointing experience with the Liquid Web sales team regarding the web server that hosts this blog post you’re reading right now. My disappointment is compounded by the…

- Hello Microsoft Entra ID, good bye Azure Active Directory

by Randolph

A couple of months ago where I work, a major product started undergoing a rebrand. I don’t pretend to understand marketing folks, but a change this big needed to be well-coordinated. As of this writing, Azure Active Directory (Azure AD for short, and never AAD) is now known as Microsoft Entra ID. You can find out…

- You should be running on SQL Server 2022

by Randolph

It’s me again with my apparently semi-annual blog post. This time we’re going to talk about which version of SQL Server you should be on, now that we’re at the end of 2023. Which version should I be on? You should be running your production environment on SQL Server 2022 with the latest cumulative…

- I don’t blog anymore, but not because I work at Microsoft

by Randolph

Last year I had a health scare that got my blood pressure at 200-and-something over 100-and-something, soon to be wired up to an IV drip, put through a CT scanner, and a couple of weeks later, poked and prodded from both ends while administered fentanyl. I’m in my mid-40s, and my father died when…

- Connecting to SQL Server Database Engine, simplified

by Randolph

One of the advantages of being on the Database Docs team at Microsoft is that I get to work with amazing people, which means we can respond quickly to customer issues. Recently, a Microsoft customer who was doing an online training course to learn SQL Server wondered what the tcp: means at the start…

- On being a woman and a data professional

by Randolph

This is a list of links, in no particular order, that you should read in no particular order. But read all of the words at the end of these links. This is just ten minutes’ worth of looking for content to link to about a very common issue: A Woman in SQL 2023 – Deborah…

- Patch your SQL Server instance today

by Randolph

On 14 February 2023, Microsoft released updates for all supported versions of SQL Server in the form of a General Distribution Release (GDR). A GDR is an out-of-band update that usually includes bug fixes and/or security patches. You can find out more about each release from aka.ms/sqlbuilds. In this case, the GDR fixes a…

- Deadline for Microsoft MVP contributions is 20 March 2023

by Randolph

Since changing to the new MVP renewal model, Microsoft MVPs have had until 31 March each year to provide the list of their annual contributions. For 2023, the deadline has been brought forward to 20 March 2023. If you always leave it to the last minute, know that the last minute is almost two…

- You can run a SQL Server Docker container on Apple M1 and M2 Silicon

by Randolph

Previously the only way to run SQL Server on Apple Silicon was via QEMU emulation or Colima (hat tip to Anthony Nocentino). Docker released beta support today for Apple’s Rosetta 2 x86 emulation layer, which means you can run SQL Server on Apple M1 or Apple M2 silicon using this option. Download and install…

- Max server memory recommendations are just a suggestion

by Randolph

Some of you might know me because of the Max Server Memory Matrix, a chart I created based on a decade-old algorithm developed by Jonathan Kehayias. The chart has lived at bornsql.ca/memory for a while. There’s even a handy script on GitHub you can run. Check it out. I’ll wait. When you install SQL Server…

- Data is not immutable

by Randolph

Information (in the form of data), usually stored as binary 1s and 0s in an electronic data store, is not immutable. Even the hardiest data storage designed to last for a thousand years, etched cleverly into some physical material, is prone to data loss. As we have seen over the past few…

- Winding down

by Randolph

Normally I write these posts on a Friday evening. Sometimes it’s later than that, but always before Monday so that my editor can give it some attention before I publish. On Friday though, I spent half the day in a hospital bed, hooked up to an IV and getting tested for several things to…

- Just one more thing, an essay on troubleshooting

by Randolph

Over the weekend I watched, for the first time in my life, an episode of the long-running ABC and NBC police show Columbo, starring Peter Falk. I originally knew him from the film The Princess Bride (fun fact: I acted in a film directed by Buttercup herself, Robin Wright). I’ve been hearing…

- Choco upgrade, y’all

by Randolph

Chocolatey is a package manager for Windows, like the built-in package managers on Linux, and third-party ones on macOS like Homebrew and MacPorts. The idea is this: when you’re setting up a machine, you don’t want to have to think about which applications you need installed to get up and running. This could be…

- If I can’t use PWDENCRYPT, how am I supposed to use HASHBYTES?

by Randolph

For this week, here is a short post about reinventing the wheel. An interesting conversation happened on Twitter where Dave Dustin asked: “Does anybody have an example of using HASHBYTES() to replace PWDENCRYPT() per the documentation that the latter is deprecated?” – Dave Dustin. Dave is referring to the Microsoft Docs page for PWDENCRYPT(),…

- On bias

by Randolph

Last week saw the second month of my employment at Microsoft. I admit that I’m enjoying my job, and I can’t deny that the reduced amount of time I’ve spent on social media has helped. Last week also saw the second user group meeting of 2022 for the Calgary Data User Group, which I…

- A new book is on its way

by Randolph

I am thrilled to announce that Microsoft Press (Pearson) has agreed to let us do another Inside Out book this year. This news is so fresh I haven’t even gotten the advance yet! The working title is SQL Server 2022 Administration Inside Out, and we have most of the same cast and crew working…

- You can’t secure your network with spite

by Randolph

I wrote a post a couple weeks ago about not changing port 1433 for security reasons. I received this comment, which is not visible on that page because it warrants a lengthy response. I have redacted the name of the commenter. I disagree. Hundreds companies around the world were victims of ransomware attack even…

- How do I merge a small part of a Git repo into another repo?

by Randolph

At my new day job, one of the things we want to do is migrate a portion of a really large Git repository (over 20GB) which I’ll call LargeRepo, into a much smaller repository (< 2GB) which I’ll call SmallRepo, because when we commit new files to the larger one, the build process takes…

- Don’t change your default SQL Server port for security reasons

by Randolph

Since we’re on a recent theme of revising long-held best practices that are not, here’s a timely one for you: Don’t change your default SQL Server port for security reasons. In SQL Server Configuration Manager, you can set a custom port for your SQL Server instance. If you’re running named instances, you might even…

- The denial-of-service attack is coming from inside the house

by Randolph

A short post this week. While I was helping some friends recently, we experienced a curious thing where as soon as an application was started up, it was immediately followed by a denial-of-service attack that played out in the most mundane way you can imagine. The application itself is an API that is replacing…

- Checking accessibility in your day-to-day work

by Randolph

Last year I wrote a series of posts about accessibility as it relates to presentations, and one aspect which I didn’t cover is within the documents themselves. We create slides with wonderful typeface choices, clear text, minimal animations, good colour choices, and so on, but what happens when we need to share those documents…

- Database Projects 101 in Azure Data Studio (Calgary Data User Group)

by Randolph

On Wednesday February 23rd, 2022, the Calgary Data User Group will be hosting our first user group session of the year, featuring Warwick Rudd. The topic is an introduction to Database Projects in Azure Data Studio, the cross-platform tool for connecting to SQL Server and Azure SQL Database. Here is the abstract: Database Projects…

- Don’t optimize for ad hoc workloads as a best practice

by Randolph

(This post was co-authored by Erik Darling.) The more things stay the same, the more they change… No, that’s not a mistake. In fact, it’s a reference to long-held belief systems that don’t take new information into account, and how confirmation bias is not a good motivator for recommending best practices. Let’s talk about…

- Join me in Germany in June

by Randolph

I have been selected to speak at the DataGrillen conference later this year. I will be presenting my session How Does SQL Server Store That Data Type?, which I debuted during last year’s EightKB online conference. This is the abstract for the talk, which will be presented on Thursday June 2nd, 2022, from 1:15 pm – 2:15 pm…

- A new chapter begins

by Randolph

In 2012 when I originally founded Born SQL, I never imagined I would find myself a five-time recipient of the Microsoft Data Platform MVP award, let alone be offered a job to work for Microsoft alongside amazing people including William Assaf and Kendra Little. It is a bittersweet moment for me to hang up my…

- SQL Server 2019 on Apple Silicon redux: it actually works

by Randolph

Edited on 12 January 2023: You can run a SQL Server Docker container on Apple M1 and M2 Silicon. Last month I wrote a blog post suggesting that it was not possible to get SQL Server 2019 running on Apple Silicon. I hedged my statement by saying you could get Azure SQL Database Edge running…

- One year later: my look back on the PASS organization

by Randolph

My good friend and talented singer Rob Volk reminded me recently that I had promised to write about my involvement with PASS at the end of its life, and I remember saying out of respect that I’d wait a year before doing so. Time flies. To recap, the few remaining assets of the PASS organization…

- And that’s a wrap

by Randolph

It’s the final week of 2021, a year that was both twice as long and half the length of 2020. If you can, please make sure you are vaccinated and boosted. It doesn’t prevent infection, but it does make the experience less awful. If you’re a recent recipient of COVID-19, please do your level…

- On the nature of constant change

by Randolph

Recently my spouse and I travelled to South Africa (yes, I know there’s a pandemic on) to deal with a gloomy family matter that required in-person interaction. Being an adult means dealing with things that other people can’t do or don’t want to do. Literally hours after we arrived, hot on the tail of…

- You can’t run SQL Server on Apple Silicon, and it sucks

by Randolph

Edited on 12 January 2023: You can run a SQL Server Docker container on Apple M1 and M2 Silicon. Prior to 2017, the only way to get SQL Server running on a Mac was through a virtual machine running some version of Windows that supported some version of SQL Server. Then SQL Server 2017…

- Your next step in the cloud

by Randolph

Over the last nine months I’ve presented (virtually) eleven times on a variety of topics relating to SQL Server and the Microsoft Data Platform. Long-time readers will know I have some experience as a high school teacher and college lecturer, and I’ve co-authored three technical books on SQL Server. My most popular session at…

- Be careful with table updates

by Randolph

The dire warning in the subject line is not meant to scare you. Rather, it is advice that is going to be useful to those of us who need to audit changes to a database. This is even more important now as SQL Server 2022 approaches, with its new system-versioned ledger tables which record…

- Calgary Data User Group: Big Data Clusters with Bob Pusateri

by Randolph

This is one of those shameless plugs I’m allowed to do from time to time to promote my user group here in Calgary. Tonight, starting 5pm Mountain time, Bob Pusateri (blog | Twitter) will be presenting an hour-long session about Big Data Clusters, a very interesting feature that was introduced with SQL Server 2019.…

- Days of future past

by Randolph

There’s a lot going on in the world today. It feels like there’s too much for us to think about. Speaking for myself, I’m worried about the environment first and foremost, followed closely by folks who refuse to wear masks that cover their noses and mouths, and finally by the apparent erosion of human…

- Table Valued Parameters and Dapper in .NET Core

by Randolph

A customer I’ve been working with for a while now has a monolithic ASP.NET MVC web application which we are porting to .NET Core 3.1 (and then almost immediately to .NET 6). One of our biggest changes was getting rid of Entity Framework and replacing it with Dapper, because performance is a feature. To…

- SQL Server 2022 announced

by Randolph

SQL Server 2022 was announced yesterday at Microsoft Ignite, and it’s going to be a big one. Building on a lot of work in the Azure SQL space, SQL Server 2022 looks to include some cool features including (but not limited to): failover to Managed Instance (and back again), enhancements to intelligent query processing…

- You don’t need a blockchain

by Randolph

After writing several posts about a neat feature in Azure SQL called system-versioned ledger tables, it reminded me about something I’ve wanted to say for a number of years now, outside of snarky tweets. Here goes: You don’t need a blockchain. In the vast majority of use cases, you need a properly audited relational…

- Join me next week at my first GroupBy session

by Randolph

2021 has been the year people want to learn about Temporal Tables, it seems. Not only am I speaking at the SQL Trail conference next week, but I was also selected to speak at the upcoming GroupBy conference on Tuesday October 26, 2021. Yes, that’s also next week! Some facts about next week’s talks:…

- System-versioned ledger tables: things you can’t do

by Randolph

This is the third post in the series about system-versioned ledger tables, a new feature introduced in Azure SQL Database. You can read Part 1 and Part 2 if you haven’t already. Every choice we make is a trade-off. New features have limitations, and ledger tables are no exception. Some of these limitations are…

- Join me at SQL Trail 2021 to hear the next thing about Temporal Tables

by Randolph

I’ve had the privilege of presenting about temporal tables in SQL Server all over the world, including the United Kingdom, Canada, and the United States. The theme of the session has always been Back to the Future, which is the greatest time travel movie ever, but I haven’t changed the talk much since I…

- System-versioned ledger tables: the next step

by Randolph

In the first post of this series, we learned about a new type of system-versioned table that also works at the database level and introduces a mechanism that demonstrates whether your database has been tampered with. Very simply, if the cryptographic hash does not match what is in the off-site digest, your database has…

- Accessibility in font choice

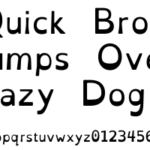

by Randolph

Choosing the right typeface for your presentation (or, for that matter, anything you create that contains words) is fraught. In a previous post I wrote about the difference between serif and sans-serif. I mentioned font families, line spacing, and kerning. I also discussed why it’s important for a font to differentiate between characters that…

- Introducing system-versioned ledger tables

by Randolph

As long-time readers of this blog know, I’m a big fan of temporal tables, also known as system-versioned temporal tables. Until recently, temporal tables were synonymous with system-versioned tables, but all that changed a short while ago with the introduction of system-versioned ledger tables in Azure SQL Database. This new series of…

- Why you need a Dead Man’s Switch

by Randolph

Right off the top here, I must note that the term “dead man’s switch” is archaic, so for the rest of this post I’ll refer to it as “operator presence control,” or OPC. The concept of an OPC is quite old and usually relates to machinery. Let’s use the example of a train. If…